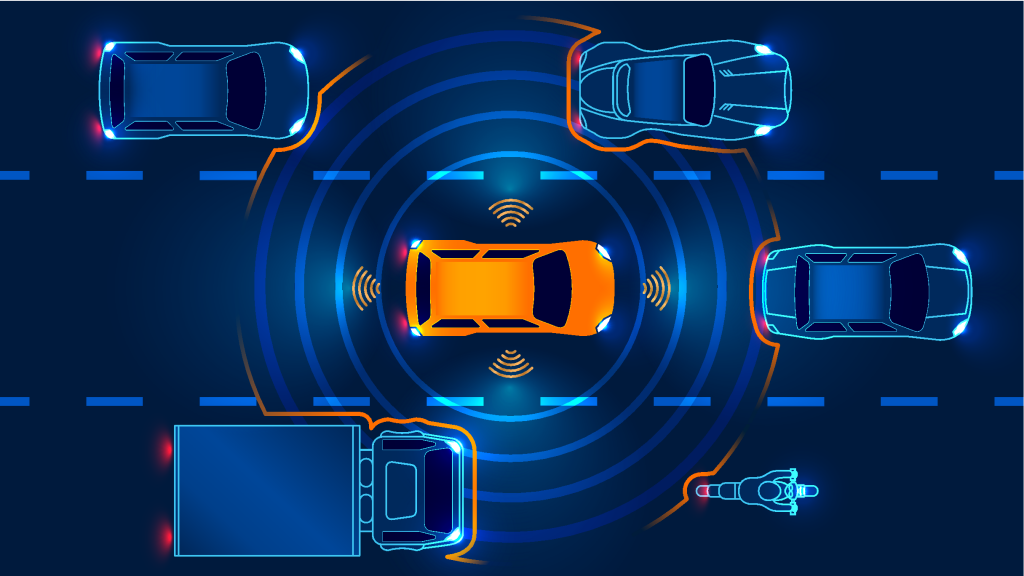

Fig 1 – Autonomous Vehicle Perceiving Its Surroundings

The auto industry is evolving toward vehicle autonomy, and with technology companies entering the sector, innovation is happening at an unprecedented rate. Today, vehicles are equipped with partial autonomy capabilities: specifically, they can maneuver on their own in highway settings (in the presence of a human driver).

The next logical step will be autonomous driving in urban settings (again, in the presence of a human driver). However, maneuvering in cities is a complex, and thus expensive, problem to solve. Autonomous vehicles (AVs) must take into account a plethora of new variables, including traffic lights, vulnerable road users, and constant changes in speed and traffic density. As a result, the industry has hit a barrier at highway autonomy: there is simply nothing on the market today that can enable urban vehicle autonomy.

A major reason the industry is stuck at highway autonomy is that the sensor configuration currently required for driving in cities does not scale. Presently, to capture image data, eight monochromatic cameras are mounted at various places around the car. Because two sources of depth information are needed, a RADAR sits adjacent to each image sensor and a single LiDAR is integrated onto the car. The total cost of all of this, mostly due to the costly LiDAR, is around $8000.

Alternatively, one can replace the monochromatic cameras with stereoscopic image sensors, which also act as sources of depth information. As a result, the costly LiDAR becomes redundant and can be eliminated. With stereo cameras alone used in conjunction with the same RADAR configuration described above, the total cost of the system is much lower than that of the current, unscalable configuration. This newfound scalability will allow car OEMs to make a smooth transition to urban autonomy as the industry evolves, keeping them competitive in the long run.
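To make the depth claim concrete, here is a minimal sketch of the standard pinhole stereo relation, in which depth equals focal length times baseline divided by disparity. It is an illustration only, not a description of any particular product's pipeline. The function name and the numbers used (a 1000-pixel focal length, a 0.5 m baseline, a 20-pixel disparity) are assumptions chosen for the example.

```python
def depth_from_disparity(disparity_px: float,
                         focal_length_px: float,
                         baseline_m: float) -> float:
    """Estimate distance (in meters) to a point seen by a rectified stereo pair.

    disparity_px    -- horizontal pixel shift of the point between left and right images
    focal_length_px -- camera focal length expressed in pixels (assumed value)
    baseline_m      -- distance between the two camera centers in meters (assumed value)
    """
    if disparity_px <= 0:
        # Zero or negative disparity means the point is at infinity or the match is invalid.
        raise ValueError("disparity must be positive")
    return focal_length_px * baseline_m / disparity_px


if __name__ == "__main__":
    # Hypothetical rig: 1000 px focal length, 0.5 m baseline, 20 px measured disparity.
    print(depth_from_disparity(20.0, 1000.0, 0.5))
```

Because depth is inversely proportional to disparity, distant objects produce small disparities, so accurate long-range depth depends on matching pixels precisely across high-resolution image pairs.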

Unfortunately, there is no platform today capable of processing stereoscopic image data. Solutions in cars today are based on legacy technology originally developed for different use cases, so they are constrained and not purpose-built for the monumental task of self-driving. A novel product must be integrated into vehicles to allow them to process stereoscopic vision, thereby enabling advanced autonomous capabilities in a scalable manner.

To process image data from stereoscopic cameras, an AV must be equipped with a platform that delivers a minimum of 75 Tera-Operations-Per-Second (TOPS) per watt of power consumption. We at Recogni are developing a solution with such capabilities. By leveraging key innovations in ASIC architecture, AI, and mathematics, our product has unmatched capabilities, at 100 TOPS per watt. As a result, we can enable urban autonomy in a practical manner through our ability to support stereoscopic image sensors. OEMs should integrate our product into their vehicles to adapt to the evolving market and enable urban autonomy.
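As a rough illustration of what TOPS per watt means in practice, the sketch below converts an efficiency figure into the power needed to sustain a given compute load. The 75 and 100 TOPS per watt figures come from the text above. The 300 TOPS workload and the function name are purely hypothetical, chosen only to make the arithmetic easy to follow.

```python
def power_required_w(workload_tops: float, efficiency_tops_per_w: float) -> float:
    """Return the power (in watts) needed to sustain a workload at a given efficiency."""
    if efficiency_tops_per_w <= 0:
        raise ValueError("efficiency must be positive")
    return workload_tops / efficiency_tops_per_w


if __name__ == "__main__":
    workload = 300.0  # hypothetical sustained compute load, in TOPS
    for efficiency in (75.0, 100.0):  # TOPS per watt figures quoted in the text
        watts = power_required_w(workload, efficiency)
        print(f"{efficiency:.0f} TOPS/W -> {watts:.1f} W for {workload:.0f} TOPS")
```

Under these assumed numbers, the same workload draws 4 W at 75 TOPS per watt and 3 W at 100 TOPS per watt.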

To learn more about Recogni, check out www.recogni.com